It’s 3 AM. You’ve been woken up by a strange new ache, a peculiar twitch, or a worrying cough that sounds suspiciously like a seal barking. Your doctor’s office is closed, of course, because doctors, unlike mysterious ailments, believe in sleeping. So, you turn to your trusty computer and type your symptoms into one of those newfangled AI health bots.

In seconds, it spits out a diagnosis that sounds incredibly official, complete with complicated medical terms. You feel a wave of relief. Or maybe terror. Either way, you have an answer.

But hold on. Before you start planning your new diet of only kale and lichen based on a robot’s advice, let’s have a little chat. Relying on an AI for a diagnosis is a bit like asking your toaster for financial advice. It might sound confident, but it has no real understanding of your life, your health, or why you shouldn’t invest your retirement fund in artisanal bread. These tools can be helpful starting points, but they can also be dangerously, hilariously, and sometimes terrifyingly wrong.

Think of an AI health bot as a librarian on super-speed. It has read virtually every medical book, study, and article ever published online. When you ask it a question, it zips through that massive library and pieces together an answer based on patterns it has seen.

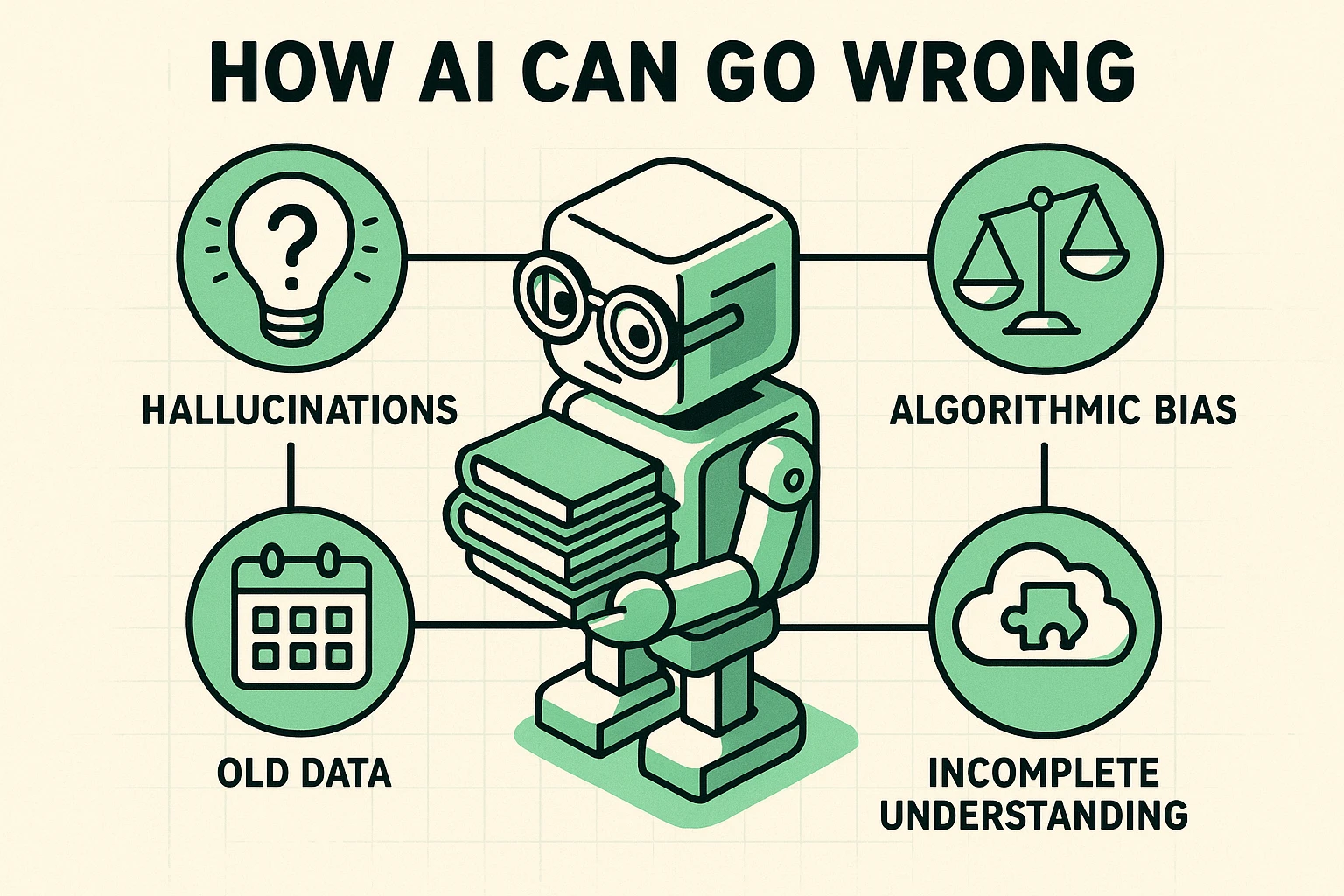

It sounds impressive, and it is! But here’s the catch: the librarian hasn’t understood a single word it read. It can’t think critically, it has no common sense, and it definitely doesn’t have a medical degree. It’s just a world-class pattern-matcher. This leads to a few big problems.

You might see websites bragging that their symptom checkers are “over 80% accurate.” That sounds great until you dig a little deeper. Often, that number refers to correctly identifying very common conditions, like the flu. When it comes to more complex or rare issues, one major study found their accuracy dropped to just one-third. That’s not an A-plus for effort.

Here are the main reasons why “Dr. AI” sometimes fails its exams.

Have you ever met someone who speaks with absolute, unshakable confidence, even when they are completely making things up? That’s an AI “hallucination.” A recent study from Mount Sinai found that AI chatbots, when presented with medical misinformation, would not only repeat it but often embellish it with fake, official-sounding citations.

The AI wants to be helpful so badly that if it doesn’t know the answer, it will simply invent one. It’s the digital equivalent of your nephew confidently giving you directions in a city he’s never visited. He sounds convincing, but you’re still going to end up in a cul-de-sac staring at a bewildered squirrel.

AI learns from the data it’s given. If that data is mostly about one type of person—say, 40-year-old men—its advice might be completely wrong for an 80-year-old woman with a different health history. This is called “algorithmic bias.”

The AI isn’t being mean; it just doesn’t know what it doesn’t know. It’s like a cookbook that only has recipes for meatloaf. If you’re looking for a salad, it’s not going to be much help. Your unique health needs, age, and background might not be properly represented in the AI’s “library.”

Medicine is constantly changing. A breakthrough treatment from last year could make advice from three years ago obsolete or even dangerous. Many AI tools are trained on data that is months or even years old. They might confidently recommend a medication or procedure that doctors no longer consider safe or effective.

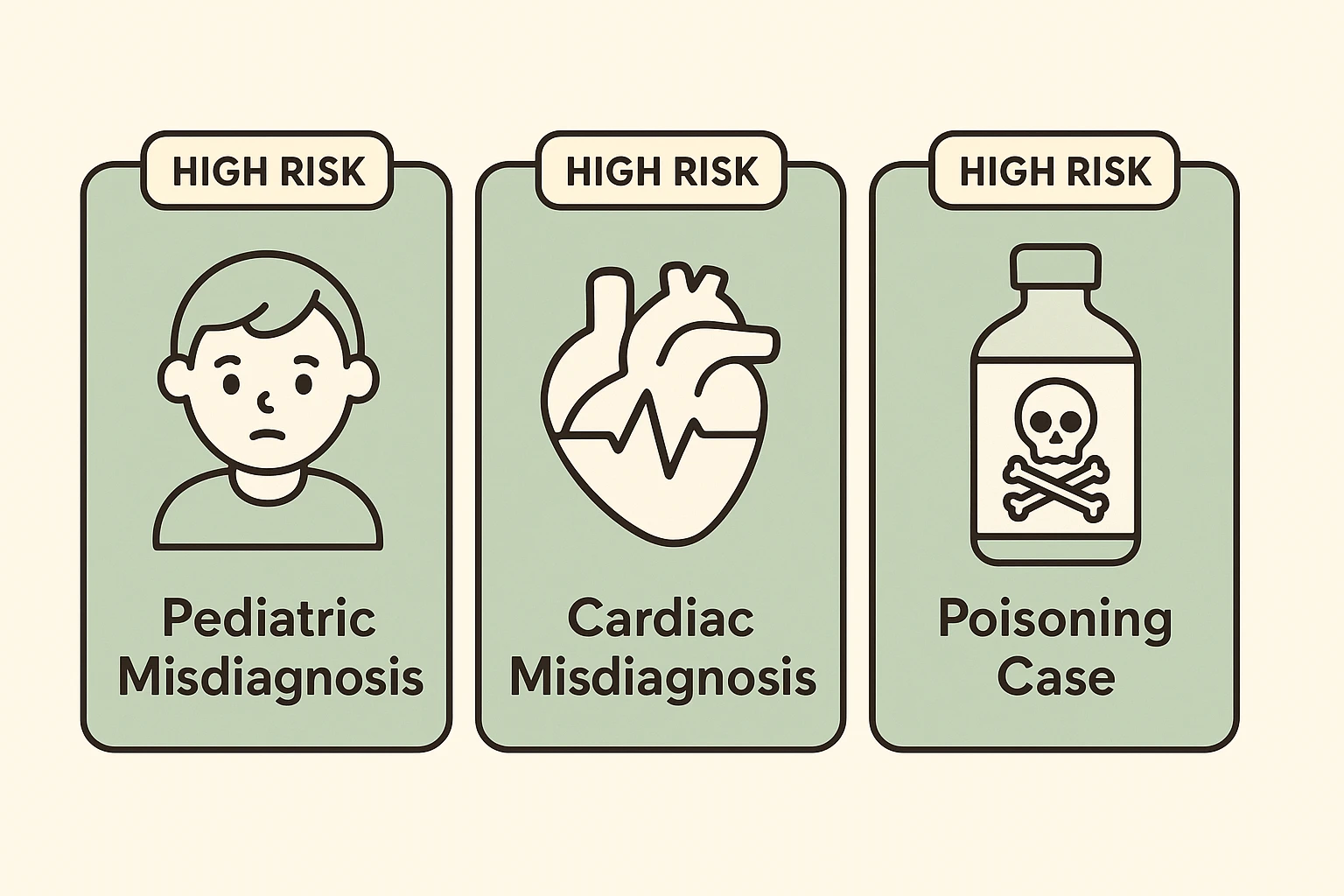

This isn’t just a theoretical problem. The risks are very real. Researchers have documented alarming cases where AI symptom checkers have made critical errors.

These aren’t harmless mistakes; they’re digital misdiagnoses with potentially devastating consequences. Trusting an algorithm with your health without a second opinion is a gamble you can’t afford to take.

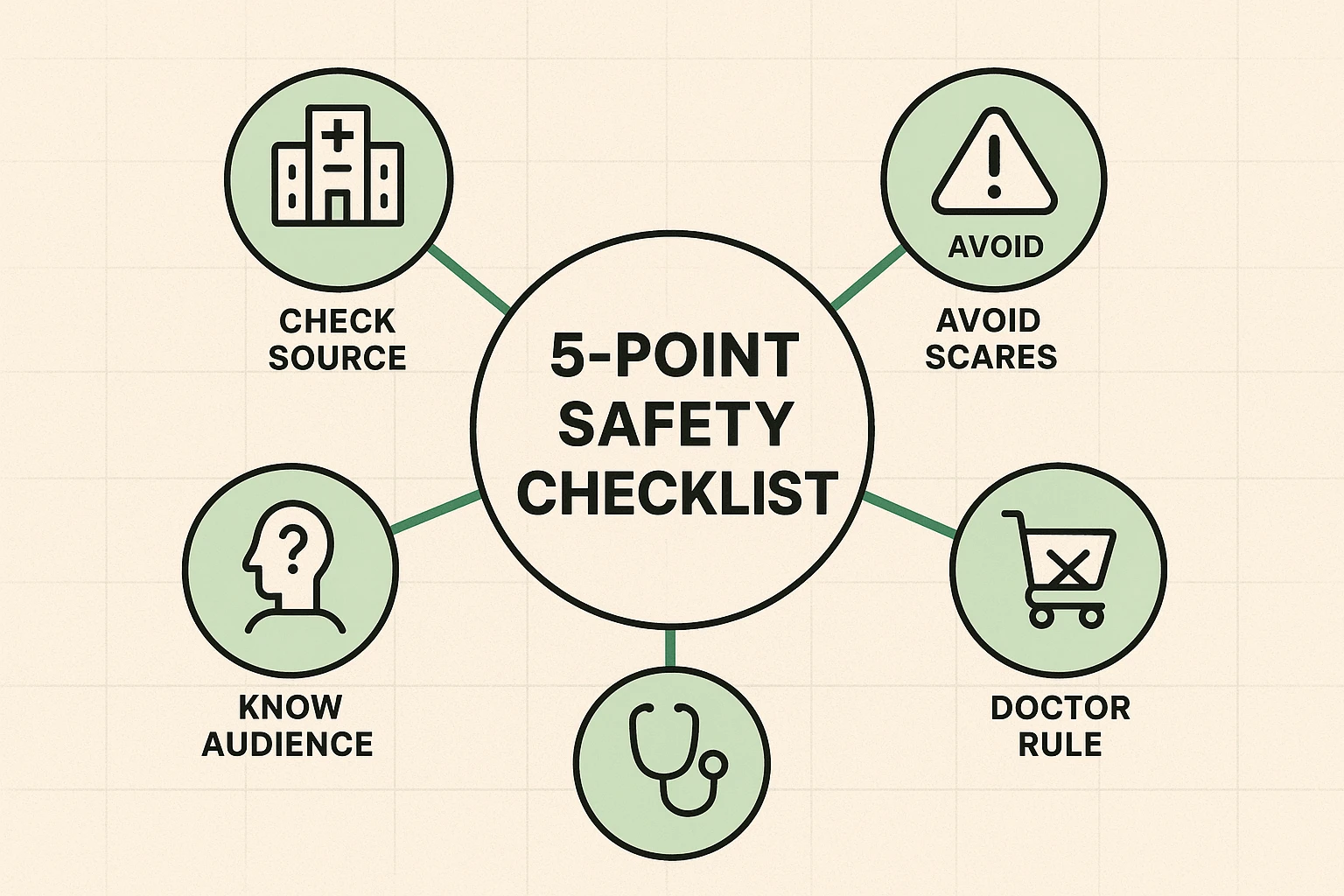

So, should you unplug your computer and never Google a symptom again? Not at all! Technology can be a fantastic tool for learning and becoming more informed about your health. You just need to be the smart, skeptical human in the relationship.

Use this five-point checklist to keep yourself safe.

AI health bots and symptom checkers can be a great first step. They can help you learn the right vocabulary to describe your symptoms and give you a list of questions to ask your doctor.

Use them to gather information, not to get a diagnosis. Think of it as preparing for a trip. You might look up maps and read travel guides online, but you’d still want to talk to someone who’s actually been there before you book your flight.

Your doctor is the expert who has been there. They know you, your history, and the nuances that no algorithm can ever understand. The smartest way to use technology is as a bridge to a better conversation with a real, live healthcare professional.

Not necessarily “bad,” but they are limited. They are best used for educational purposes to understand possibilities, not for a final diagnosis. The danger comes from treating their output as a medical fact without consulting a professional.

This can be a good use case, but with caution. An AI can be helpful for asking general questions like, “Should this medication be taken with food?” However, always cross-check the information against the official pharmacy printout or call your pharmacist. For questions about side effects or interactions with other drugs you’re taking, only your doctor or pharmacist can give you safe advice.

That’s great! It can reinforce your doctor’s guidance. However, don’t let it create a false sense of security. Just because the AI was right once doesn’t mean it will be right the next time. The rule remains the same: always let your doctor have the final say on any health decisions.